Pk nextGen: Difference between revisions

| (14 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

'''What we know for sure:''' | |||

What we know for sure: | |||

1. MikeC is to develop a PIKA-esque tool for multi-dimensional data from TofDAQ data files. | 1. MikeC is to develop a PIKA-esque tool for multi-dimensional data from TofDAQ data files. | ||

| Line 9: | Line 6: | ||

<br>4. Whatever gets written should address existing desires and concerns with the PIKA code, and be fully transparent so as to accept any input data type. It should be developed with memory and speed optimisation in mind. | <br>4. Whatever gets written should address existing desires and concerns with the PIKA code, and be fully transparent so as to accept any input data type. It should be developed with memory and speed optimisation in mind. | ||

'''Who will use this software?''' | |||

1. PIKA users, via careful integration of new software to mate with existing PIKA construct<br> | |||

2. Aerodyne-based Tofwerk-TOF-users community<br> | |||

3. TW prototype instrument application developers<br> | |||

4. Other TW OEM customers, probably via libraries (mapped from the template this Igor code will provide)<br> | |||

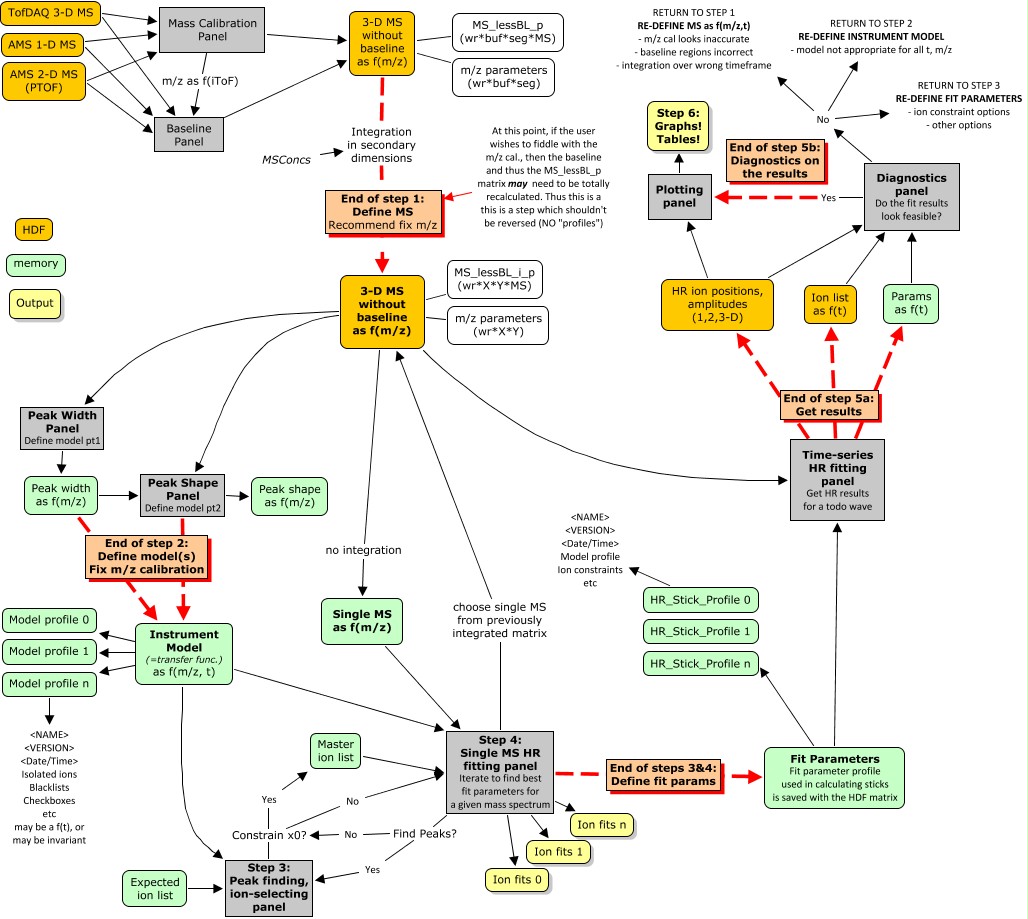

=== | =Software map= | ||

[[File:2010-10-08_HRconceptmap.jpg]] | |||

==Step 1: Define ''MS Matrix''== | |||

The user first runs through | |||

* m/z calibration as f(t) | |||

* Baseline determination as f(t) | |||

At this point, the user generates an HDF dataset containing the raw multi-dimensional MS matrix on which the entire HR-fitting process will run. This will include | |||

* integration in secondary dimensions (not writes/runs) | |||

* saving the raw data and the baseline-subtracted data | |||

Note that it is '''not''' prudent to allow multiple copies of the mass cal. and bsl params to pevade through the HR-fitting, as these affect the HDF matrix directly and thus must be considered FIXED during HR-fitting. We thus save the date/time of creation of this matrix as an associated HDF attribute, for comparison with outputs in steps 2-5. We need '''some method to flag the m/z cal and bsl params''', such that if the user changes them, the remaining steps in this HR-fitting process are immediately aware, and force a re-start from step 1. | |||

** '''Important caveat''': Steps 1&2 can be iterated, thus we only ''recommend'' fixing m/z at step 2, and force thereafter. | |||

==Step 2: Define model== | |||

* | Identical process to PIKA peak width/shape determination, with option to save multiple copies of the output instrument model. In each copy: | ||

** | * Peak width&shape as f(m/z, t) | ||

* | ** The model will certainly change as a f(m/z). It may change as a f(t). | ||

* | * Name and version (so if updated, time-series can tell) | ||

* Date/Time of creation (compare to MS-matrix... if older, the model is flagged as VOID - really IMPORTANT) | |||

==Step 3: Define fit parameters (via SingleMS panel)== | |||

TW panel will be inherently more complex than PIKA, which can retain some default options for this section 'under the hood', so to speak. | |||

* Choose a slice from the integrated, calibrated, baseline-subtracted MS matrix calculated in step 1. With this single MS: | |||

** Choose an instument model | |||

** Choose ion contraint options: | |||

*** 1. Constrain the ion list (cf PIKA). '''Directly edit''' a master list | |||

*** 2. Find peaks in the MS, perhaps using initial guesses. '''Generate''' a master list | |||

*** 3. Don't constrain the ion list in the fits. '''Reference''' a master list (which could be an exact mass finder) so as to to give meaning to the fit results | |||

*** 4. Combination. Find peaks (2) but then allow the x0 to vary in the fits | |||

** Choose isotopic constraints if appropriate | |||

** Choose other fit parameters | |||

* Display results | |||

* Re-evaluate parameters and calculate new results. Intercompare. | |||

* '''Choose one set of fit parameters for time-series calculations''' | |||

== | ==Step 4: Get results== | ||

Use parameters from step 3 to generate time-series ion fits for a todo-wave. Save, as f(t): | |||

* HDF: Ions fitted | |||

* | ** Exact mass | ||

* | ** Chemical formula - if not constraining the list, this is a guess! | ||

** | * HDF: Ion x0 - if allowed to vary | ||

* | * HDF: Ion amplitude | ||

** | * Mem: Fit Parameters as f(runs), thru named HR_Sticks_Profile | ||

** | ** Instrument model profile name and version (so if updated, can tell) | ||

* | ** Creation date/time of this instrument model | ||

** | ** Creation date/time of MS matrix used in the fits | ||

** | ** Ion constraint options | ||

** Checkboxes etc from singleMS panel that influence the fits | |||

** Name of HDF waves saved | |||

==Step 5: Diagnostics== | |||

* | Crunch point for the user. If things look bad, they have to return to a previous step: | ||

* The | * '''Return to step 1:''' oh dear | ||

** the | ** User believes there is a problems with | ||

* The | *** mass calibration | ||

*** baseline subtraction | |||

* | *** MS integration regions (cf Donna's PTOF regions) | ||

** Altering any of these will alter the MS Matrix... voiding the instrument model. The user has to '''start all over again'''. | |||

* '''Return to step 2:''' model issues | |||

** The user believes there is a problem with | |||

*** a particular model profile used in one of the runs | |||

** Altering this voids the model, and possibly therefore the ion lists etc. The user must re-evaluate those runs calculated using this model | |||

** NOTE: If the user thinks they should instead have used a different model profile, they could just re-calculate at this point, caveating that this does not afford them to chance to check the ion lists again. This is essentially one of the options in the next bullet | |||

* '''Return to step 3:''' fit params look bad | |||

** The user believes there are problems with | |||

*** the ion constraint options, master lists | |||

*** the isotopic constraint options | |||

*** the fit options employed | |||

*** the instrument model profile chosen for each run | |||

** Altering any of these changes the HR sticks, and thus the users must choose again (more wisely) and re-calculate | |||

=Changes from and similarities to PIKA= | |||

==Being kept analagous to PIKA== | |||

* Re-requisite of 'SQUIRREL'-steps '''m/z and baseline''' before HR-fitting commences | |||

* Isotopic constraints. We always require all unit masses to be fitted together, in the correct order. | |||

* Master list option. | |||

* Time-series changes in the instrument model (tho the method changes). | |||

* Stages where diagnostics are assessed. Points at which the user has to revert if changes made... even if this is not apparent currently! | |||

==Changed from PIKA== | |||

* Multi-dimensional. Although points in this discussion may not explicitly refer to it, the entire process must be capable of acting on a 3-D dataset. | |||

** Caveats: We allow the model profile and fit-parameters chosen for the time-series to vary on a run-by-run basis ONLY. This is to keep to the todo-indexing system (and force users to save appropriately with TofDAQ!!). | |||

* Handling of instrument model profiles: | |||

** Individual profiles can be saved. Time-series calculations act on profile names, not PWPS parameters. | |||

* Single MS analysis. | |||

** Intercomparison of multiple sets of fits, using different | |||

*** MS slices from the matrix | |||

*** model profiles | |||

*** fit parameters (ion constraints etc) | |||

** Additional options for ion constraints | |||

*** Master list is an option, not a given | |||

*** Peak-finding routine can be used to generate a master list from a given MS-model-param combination | |||

*** x0 can be allowed to vary in the minimisation (ie no constraints at all)... assigning found exact masses to chemical formulae after-the-fact | |||

* MSConcs writes to HDF (in step 1) | |||

= | =Questions and discussion points= | ||

* How to flag the m/z calibration and baseline parameter determination? The HR-fitting routine needs to know | |||

** when these were calculated, and | |||

** if it was thus prior to the calculation of the MS Matrix | |||

** '''if not''', then we need to force the user to start over at step 1 | |||

* Should we save m/z and bsl params with the MS matrix? | |||

** Should not be entirely necessary if we keep track of date/time... plus, it will be huge | |||

* How to implement the single MS intercomparison analysis? Got to keep track of | |||

** MS slice used from the matrix | |||

** instrument model | |||

** ion constraints (and/or chosen ions to fit) | |||

** therefore we need to brainstorm the interface to make this happen | |||

* Different datasets: | |||

** Have not put much in this discussion about the use of differnet datasets (eg open/closed). Clearly need this capability. | |||

** Instrument model should be easily able to handle these since we are coding in profiles | |||

** If, for e.g., the open m/z is altered, forcing the user to step1 for the open HR sticks, we should probably retain the ability to note that the closed HR sticks are not void, right?! | |||

* Parameter Profiles? | |||

** Much talk about instrument model profiles... what about for the fit parameters? Do we get the user to build up a (named, versioned, dated) profile containing: | |||

*** Ion constraint options | |||

*** Isotopic options | |||

*** Other fit options | |||

*** Model profile name | |||

*** Slice used from MS Matrix in the single MS analysis, and which dataset | |||

** '''Advantage would be''' that we don't save a bunch of stuff in the HR_Sticks HDF, but rather a 'parameter-profile' name for each todo-wave | |||

* Use of profiles: | |||

** Assuming that the use of the profiles arises through todo-waves... so, rather than defining which profile to use as f(runs), we just save which WAS used as f(runs).... its a subtle but important difference. | |||

* Advanced options: | |||

** Coding in advanced option to save multiple copies of HR_Sticks for a given dataset. | |||

* Linking of ion lists | |||

** If using a master list... could flag the ions used in m/z, PW, PS, fits in analgous manner. | |||

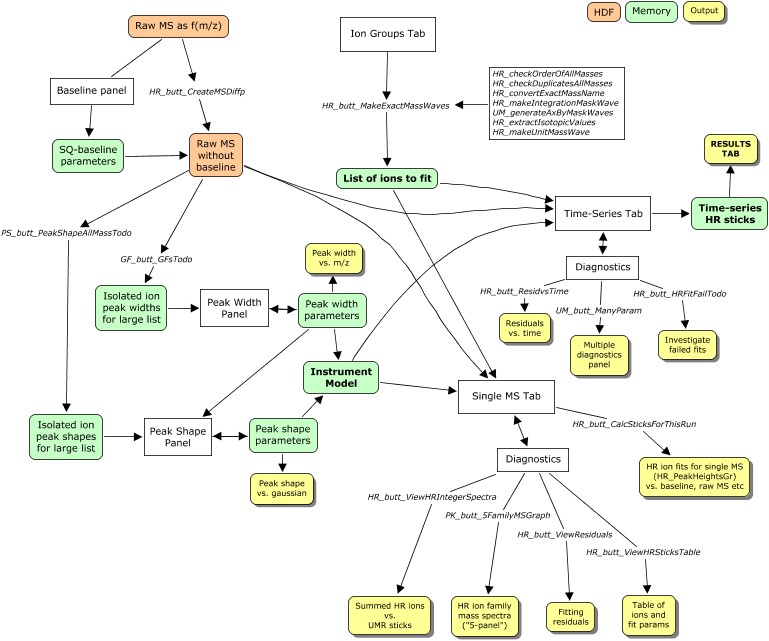

= | = Existing PIKA fitting process: steps = | ||

[[File:2010-09-21 MJC PIKAconceptmap.jpg]] | [[File:2010-09-21 MJC PIKAconceptmap.jpg]] | ||

==1. Preparation for HR fitting== | |||

The order of preparatory steps below is generally not modifiable. | The order of preparatory steps below is generally not modifiable. | ||

''(MJC: comments added for changes required in the TW version)'' | ''(MJC: comments added for changes required in the TW version)'' | ||

| Line 170: | Line 168: | ||

(1E) Select a subset of HR ions in (1D) to be constrained.<br>Constrained means that the fitting routine does not ‘fit’ this HR ion – the peak height is fixed to a value based on the magnitude of the HR ion’s isotopic ‘parent’ that has been previously fit or determined. This has consequences about the order in which UMR sets of HR ions at on m/z are fit. | (1E) Select a subset of HR ions in (1D) to be constrained.<br>Constrained means that the fitting routine does not ‘fit’ this HR ion – the peak height is fixed to a value based on the magnitude of the HR ion’s isotopic ‘parent’ that has been previously fit or determined. This has consequences about the order in which UMR sets of HR ions at on m/z are fit. | ||

==2. Perform HR fitting== | |||

In the current AMS HR code the HR fitting is performed at one UMR set of HR ions (all chosen HR ions at an individual integer m/z range). For example at nominal m/z 18 the HR ions 18O+, H2O+, 15NH3 at 17.999161, 18.010559, 18.023581 are fit in one ‘set’. Typically isotopic HR ions are constrained, and hence in this case the magnitude of the HR ions of O+ and NH3+ would need to be determined from sets of HR ions at nominal m/z at 16 and 17 before the set of HR ions at 18 would be found. In future applications ToF mass spec applications of high organic fragments (>250m/zish?) with a positive mass defect the division into nominal m/z sets of HR ions could be problematic. | In the current AMS HR code the HR fitting is performed at one UMR set of HR ions (all chosen HR ions at an individual integer m/z range). For example at nominal m/z 18 the HR ions 18O+, H2O+, 15NH3 at 17.999161, 18.010559, 18.023581 are fit in one ‘set’. Typically isotopic HR ions are constrained, and hence in this case the magnitude of the HR ions of O+ and NH3+ would need to be determined from sets of HR ions at nominal m/z at 16 and 17 before the set of HR ions at 18 would be found. In future applications ToF mass spec applications of high organic fragments (>250m/zish?) with a positive mass defect the division into nominal m/z sets of HR ions could be problematic. | ||

===(2A) Single MS high-resolution stick calculation & diagnostics=== | |||

Before a user spends a lot of computation time generating HR sticks, it is beneficial to examine HR fits of few raw spectra at periods of various concentrations and compositions. This involves visually inspecting each UMR m/z region of interest and significant signal. This visual inspection gives a user feedback on all the parameters that go into the fit: m/z calibration, baseline settings, PW, PS, selection of HR ions to fit. It is often at this stage where at least one of the input parameters of the HR fit requires fine-tuning. | Before a user spends a lot of computation time generating HR sticks, it is beneficial to examine HR fits of few raw spectra at periods of various concentrations and compositions. This involves visually inspecting each UMR m/z region of interest and significant signal. This visual inspection gives a user feedback on all the parameters that go into the fit: m/z calibration, baseline settings, PW, PS, selection of HR ions to fit. It is often at this stage where at least one of the input parameters of the HR fit requires fine-tuning. | ||

Other diagnostics for single spectra include: | Other diagnostics for single spectra include: | ||

| Line 182: | Line 180: | ||

* Mike’s HR ion sensitivity tool. | * Mike’s HR ion sensitivity tool. | ||

===(2B) High-resolution stick calculation for many spectra=== | |||

As the AMS HR code currently exists in version 1.09, the HR fitting results are only saved in intermediate files for future access via subsequent ‘fetch’ commands. It is beneficial for users to have a place to ‘play’ while keeping the HR fitting results from being modified. | As the AMS HR code currently exists in version 1.09, the HR fitting results are only saved in intermediate files for future access via subsequent ‘fetch’ commands. It is beneficial for users to have a place to ‘play’ while keeping the HR fitting results from being modified. | ||

==3. Organizing, displaying HR stick results & diagnostics== | |||

===(3A) Organization (order of steps important here)=== | |||

(3Ai) Define HR families<br>It will always be convenient to group HR ions into families. A family can be defined explicitly by listing its members (i.e. family Cx =C+, C2+, C3+, etc) or by an algorithm that parses the chemical formula of each HR ion. The AMS HR code currently wants families to be determined at the same time as the selection of the HR ions to fit. However, this is unnecessary and future versions will allow more flexibility. | (3Ai) Define HR families<br>It will always be convenient to group HR ions into families. A family can be defined explicitly by listing its members (i.e. family Cx =C+, C2+, C3+, etc) or by an algorithm that parses the chemical formula of each HR ion. The AMS HR code currently wants families to be determined at the same time as the selection of the HR ions to fit. However, this is unnecessary and future versions will allow more flexibility. | ||

| Line 193: | Line 191: | ||

(3Aiii) Define HR frag entries<br>HR frag entries explicitly states the mathematical treatment of any/all specialized considerations of HR ion, whether the HR ion was fit or not. | (3Aiii) Define HR frag entries<br>HR frag entries explicitly states the mathematical treatment of any/all specialized considerations of HR ion, whether the HR ion was fit or not. | ||

===(3B) Display=== | |||

Users will require typical output: time series, mass spectra summed or averaged in user-defined ways, and plotted in a variety of formats. What is different from a UMR analysis is that user have an additional type of entity: HR families as well as individual HR ions, unit resolution summed values, and HR species. | Users will require typical output: time series, mass spectra summed or averaged in user-defined ways, and plotted in a variety of formats. What is different from a UMR analysis is that user have an additional type of entity: HR families as well as individual HR ions, unit resolution summed values, and HR species. | ||

===(3C) Diagnostics=== | |||

All the diagnostics outlined in 2A above, and with a time (or other) dimension will be required by users. | All the diagnostics outlined in 2A above, and with a time (or other) dimension will be required by users. | ||

Latest revision as of 00:11, 8 October 2010

What we know for sure:

1. MikeC is to develop a PIKA-esque tool for multi-dimensional data from TofDAQ data files.

2. The non-AMS data format and the multi-dimensional-awareness requirement force a re-write of the HR code rather than straight adoption of existing PIKA.

3. Other TW (non-AMS) applications will wish to use many existing PIKA features (not limited to PWPS, HRfrag, families, isotopic constraints), but others will be less applicable (obviously, the AMS-centric parts!).

4. Whatever gets written should address existing desires and concerns with the PIKA code, and be fully transparent so as to accept any input data type. It should be developed with memory and speed optimisation in mind.

Who will use this software?

1. PIKA users, via careful integration of new software to mate with existing PIKA construct

2. Aerodyne-based Tofwerk-TOF-users community

3. TW prototype instrument application developers

4. Other TW OEM customers, probably via libraries (mapped from the template this Igor code will provide)

Software map

[Figure: 2010-10-08_HRconceptmap.jpg]

Step 1: Define MS Matrix

The user first runs through

- m/z calibration as f(t)

- Baseline determination as f(t)

At this point, the user generates an HDF dataset containing the raw multi-dimensional MS matrix on which the entire HR-fitting process will run. This will include

- integration in secondary dimensions (not writes/runs)

- saving the raw data and the baseline-subtracted data

Note that it is not prudent to allow multiple copies of the mass cal. and bsl params to pervade the HR-fitting, as these affect the HDF matrix directly and thus must be considered FIXED during HR-fitting. We thus save the date/time of creation of this matrix as an associated HDF attribute, for comparison with outputs in steps 2-5. We need some method to flag the m/z cal and bsl params, such that if the user changes them, the remaining steps in this HR-fitting process are immediately aware and force a re-start from step 1.

- Important caveat: Steps 1 & 2 can be iterated, so we only recommend fixing m/z at step 2, and enforce it thereafter.
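One possible way to flag the m/z cal and bsl params is to store a fingerprint of them next to the creation date/time of the MS matrix. The sketch below is only an illustration in Python (the real tool is planned as Igor code). The attribute names, the parameter dictionaries and the use of a SHA-256 hash are all assumptions, not decisions made in this discussion.

```python
import hashlib
import json
from datetime import datetime, timezone


def params_fingerprint(mz_cal: dict, baseline: dict) -> str:
    """Return a stable hash of the m/z calibration and baseline parameters."""
    # sort_keys makes the hash independent of dictionary ordering
    payload = json.dumps({"mz_cal": mz_cal, "baseline": baseline}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_matrix_attrs(mz_cal: dict, baseline: dict) -> dict:
    """Attributes to store with the MS matrix (hypothetical layout)."""
    return {
        "created": datetime.now(timezone.utc).isoformat(),
        "params_fingerprint": params_fingerprint(mz_cal, baseline),
    }


def must_restart_from_step1(matrix_attrs: dict, mz_cal: dict, baseline: dict) -> bool:
    """True if the current parameters differ from those used to build the matrix."""
    return matrix_attrs["params_fingerprint"] != params_fingerprint(mz_cal, baseline)


# Made-up parameter values, for illustration only
cal = {"a": 2345.6, "b": -120.0, "power": 0.5}
bsl = {"resolution": 4, "region_width": 0.2}
attrs = make_matrix_attrs(cal, bsl)
changed_cal = {**cal, "a": 2345.7}
```

With this approach, steps 2-5 would only need to call `must_restart_from_step1` before doing any work, without storing the full parameter sets alongside the matrix.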

Step 2: Define model

Identical process to PIKA peak width/shape determination, with option to save multiple copies of the output instrument model. In each copy:

- Peak width & shape as f(m/z, t)

  - The model will certainly change as f(m/z). It may change as f(t).

- Name and version (so if updated, time-series can tell)

- Date/Time of creation (compare to that of the MS matrix... if the model is older, it is flagged as VOID - really IMPORTANT)
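The date/time comparison above could be as simple as the following sketch. It is illustrative Python only; `ModelProfile`, its fields and the example profile name are hypothetical, chosen to mirror the name, version and creation time listed above.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ModelProfile:
    """One saved copy of the instrument model (hypothetical record)."""
    name: str
    version: int
    created: datetime


def is_void(model: ModelProfile, matrix_created: datetime) -> bool:
    """A model created before the current MS matrix no longer describes it."""
    return model.created < matrix_created


# Made-up timestamps for illustration
matrix_created = datetime(2010, 10, 8, 9, 0)
old_model = ModelProfile("example_profile", 1, datetime(2010, 10, 7, 17, 30))
new_model = ModelProfile("example_profile", 2, datetime(2010, 10, 8, 9, 45))
```

Here `old_model` would be flagged as VOID because it predates the matrix, while `new_model` would remain usable.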

Step 3: Define fit parameters (via SingleMS panel)

TW panel will be inherently more complex than PIKA, which can retain some default options for this section 'under the hood', so to speak.

- Choose a slice from the integrated, calibrated, baseline-subtracted MS matrix calculated in step 1. With this single MS:

  - Choose an instrument model

  - Choose ion constraint options:

    - 1. Constrain the ion list (cf PIKA). Directly edit a master list

    - 2. Find peaks in the MS, perhaps using initial guesses. Generate a master list

    - 3. Don't constrain the ion list in the fits. Reference a master list (which could be an exact mass finder) so as to give meaning to the fit results

    - 4. Combination. Find peaks (2) but then allow the x0 to vary in the fits

  - Choose isotopic constraints if appropriate

  - Choose other fit parameters

- Display results

- Re-evaluate parameters and calculate new results. Intercompare.

- Choose one set of fit parameters for time-series calculations
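To make the four ion constraint options concrete, the sketch below encodes them as an enumeration with the behaviour each one implies. This is one reading of the list above, written in Python for illustration; the names `IonConstraint` and `ConstraintBehaviour` are hypothetical, and whether x0 stays fixed in options 1 and 2 is an assumption drawn from the wording of option 4.

```python
from dataclasses import dataclass
from enum import Enum


class IonConstraint(Enum):
    EDIT_MASTER_LIST = 1   # option 1: constrain the ion list, cf PIKA
    PEAK_FIND = 2          # option 2: find peaks, generate a master list
    UNCONSTRAINED = 3      # option 3: free fit, master list used for reference
    PEAK_FIND_FREE_X0 = 4  # option 4: find peaks, then let x0 vary


@dataclass(frozen=True)
class ConstraintBehaviour:
    runs_peak_finder: bool
    x0_free: bool
    master_list_role: str  # "edit", "generate" or "reference"


BEHAVIOUR = {
    IonConstraint.EDIT_MASTER_LIST: ConstraintBehaviour(False, False, "edit"),
    IonConstraint.PEAK_FIND: ConstraintBehaviour(True, False, "generate"),
    IonConstraint.UNCONSTRAINED: ConstraintBehaviour(False, True, "reference"),
    IonConstraint.PEAK_FIND_FREE_X0: ConstraintBehaviour(True, True, "generate"),
}
```

A lookup table like this would let the SingleMS panel and the time-series code share one definition of what each option means.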

Step 4: Get results

Use parameters from step 3 to generate time-series ion fits for a todo-wave. Save, as f(t):

- HDF: Ions fitted

  - Exact mass

  - Chemical formula - if not constraining the list, this is a guess!

- HDF: Ion x0 - if allowed to vary

- HDF: Ion amplitude

- Mem: Fit Parameters as f(runs), through a named HR_Sticks_Profile

  - Instrument model profile name and version (so if updated, can tell)

  - Creation date/time of this instrument model

  - Creation date/time of MS matrix used in the fits

  - Ion constraint options

  - Checkboxes etc. from SingleMS panel that influence the fits

  - Name of HDF waves saved
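A rough sketch of what the in-memory HR_Sticks_Profile and its f(runs) bookkeeping might hold is given below, in Python for illustration. The field names and the `record_fit` helper are hypothetical; only the list of items comes from the bullets above.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class HRSticksProfile:
    """Fit settings saved for a time-series calculation (hypothetical layout)."""
    model_name: str
    model_version: int
    model_created: datetime
    matrix_created: datetime
    ion_constraint: str
    panel_options: dict = field(default_factory=dict)
    hdf_wave_names: list = field(default_factory=list)


def record_fit(log: dict, runs: list, profile_name: str) -> None:
    """Note which profile was used for each run in the todo-wave."""
    for run in runs:
        log[run] = profile_name


used_profile = {}
record_fit(used_profile, [101, 102, 103], "profileA")
record_fit(used_profile, [103, 104], "profileB")  # re-fitting run 103 overwrites it
```

The log records what was actually used for each run after the fact, rather than prescribing a profile per run in advance.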

Step 5: Diagnostics

Crunch point for the user. If things look bad, they have to return to a previous step:

- Return to step 1: oh dear

  - The user believes there is a problem with

    - mass calibration

    - baseline subtraction

    - MS integration regions (cf Donna's PTOF regions)

  - Altering any of these will alter the MS Matrix... voiding the instrument model. The user has to start all over again.

- Return to step 2: model issues

  - The user believes there is a problem with

    - a particular model profile used in one of the runs

  - Altering this voids the model, and possibly therefore the ion lists etc. The user must re-evaluate those runs calculated using this model

  - NOTE: If the user thinks they should instead have used a different model profile, they could just re-calculate at this point, with the caveat that this does not afford them the chance to check the ion lists again. This is essentially one of the options in the next bullet

- Return to step 3: fit params look bad

  - The user believes there are problems with

    - the ion constraint options, master lists

    - the isotopic constraint options

    - the fit options employed

    - the instrument model profile chosen for each run

  - Altering any of these changes the HR sticks, and thus the user must choose again (more wisely) and re-calculate
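The return rules above amount to a cascade: going back to a step voids that step's output and everything downstream. A minimal Python sketch of that idea follows. The step labels are shorthand, and the per-run granularity mentioned for step 2 is deliberately left out, so this is a simplification rather than the intended design.

```python
STEPS = {
    1: "MS matrix",
    2: "instrument model",
    3: "fit parameters",
    4: "HR sticks",
    5: "diagnostics",
}


def voided_by_return_to(step: int) -> list:
    """Outputs that must be regenerated when the user returns to `step`."""
    if step not in (1, 2, 3):
        raise ValueError("the user can only return to step 1, 2 or 3")
    # Everything from the chosen step onwards has to be redone
    return [name for number, name in sorted(STEPS.items()) if number >= step]
```

For example, `voided_by_return_to(1)` lists all five outputs, which is the "start all over again" case.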

Changes from and similarities to PIKA

Being kept analogous to PIKA

- Pre-requisite of 'SQUIRREL'-steps m/z and baseline before HR-fitting commences

- Isotopic constraints. We always require all unit masses to be fitted together, in the correct order.

- Master list option.

- Time-series changes in the instrument model (though the method changes).

- Stages where diagnostics are assessed. Points at which the user has to revert if changes are made... even if this is not apparent currently!

Changed from PIKA

- Multi-dimensional. Although points in this discussion may not explicitly refer to it, the entire process must be capable of acting on a 3-D dataset.

  - Caveats: We allow the model profile and fit-parameters chosen for the time-series to vary on a run-by-run basis ONLY. This is to keep to the todo-indexing system (and force users to save appropriately with TofDAQ!!).

- Handling of instrument model profiles:

  - Individual profiles can be saved. Time-series calculations act on profile names, not PWPS parameters.

- Single MS analysis.

  - Intercomparison of multiple sets of fits, using different

    - MS slices from the matrix

    - model profiles

    - fit parameters (ion constraints etc.)

  - Additional options for ion constraints

    - Master list is an option, not a given

    - Peak-finding routine can be used to generate a master list from a given MS-model-param combination

    - x0 can be allowed to vary in the minimisation (i.e. no constraints at all)... assigning found exact masses to chemical formulae after-the-fact

- MSConcs writes to HDF (in step 1)

Questions and discussion points

- How to flag the m/z calibration and baseline parameter determination? The HR-fitting routine needs to know

  - when these were calculated, and

  - whether this was prior to the calculation of the MS Matrix

  - if not, then we need to force the user to start over at step 1

- Should we save m/z and bsl params with the MS matrix?

  - Should not be entirely necessary if we keep track of date/time... plus, it will be huge

- How to implement the single MS intercomparison analysis? Got to keep track of

  - MS slice used from the matrix

  - instrument model

  - ion constraints (and/or chosen ions to fit)

  - therefore we need to brainstorm the interface to make this happen

- Different datasets:

  - Have not put much in this discussion about the use of different datasets (e.g. open/closed). Clearly need this capability.

  - Instrument model should be easily able to handle these since we are coding in profiles

  - If, e.g., the open m/z is altered, forcing the user back to step 1 for the open HR sticks, we should probably retain the ability to note that the closed HR sticks are not void, right?!

- Parameter Profiles?

  - Much talk about instrument model profiles... what about for the fit parameters? Do we get the user to build up a (named, versioned, dated) profile containing:

    - Ion constraint options

    - Isotopic options

    - Other fit options

    - Model profile name

    - Slice used from MS Matrix in the single MS analysis, and which dataset

  - Advantage would be that we don't save a bunch of stuff in the HR_Sticks HDF, but rather a 'parameter-profile' name for each todo-wave

- Use of profiles:

  - Assuming that the use of the profiles arises through todo-waves... so, rather than defining which profile to use as f(runs), we just save which WAS used as f(runs).... it's a subtle but important difference.

- Advanced options:

  - Coding in advanced option to save multiple copies of HR_Sticks for a given dataset.

- Linking of ion lists

  - If using a master list... could flag the ions used in m/z, PW, PS, fits in an analogous manner.

Existing PIKA fitting process: steps

[Figure: 2010-09-21 MJC PIKAconceptmap.jpg]

1. Preparation for HR fitting

The order of preparatory steps below is generally not modifiable. (MJC: comments added for changes required in the TW version)

(1A) Get good m/z calibration parameters. Mike has this code in place for Tofwerk files.

(1B) Get good baseline-removed spectra.

The purpose of saving copies of the raw spectra with the baseline removed is so that the multipeak fitting is done on the ‘same’ spectra. This ensures that the calculation of any baseline isn’t dependent on settings (‘resolution’ interpolation parameters) that could be adjusted by the user and then not saved, not recorded, and hence not replicable.

MJC: Something to consider: should the dimensional averaging be performed at this stage? This would limit the user to the prescribed dimensional bases, but facilitate the sped-up analysis currently available in PIKA?

DTS: Yes, you are correct. This is the place to generate the spectra that will be fit. I think it important that the PW and PS calcs are based on the same spectra that will be fit.

(1C) Get good peak width (PW), peak shape (PS).

Getting good values for these parameters is necessarily an iterative process. In general one looks at 100s of spectra of isolated HR ions (e.g. C4H9+, etc.) to get good statistics for PW and PS. Once a user is confident that the selected HR ions are behaving in a consistent and ‘smooth’ manner, one can set the PW and even PS on an individual run basis if needed.

MJC: TW product will require a more generalised version of the current code, which hard-wires in AMS-specific ions...

DTS: Yes the current list of HR ions fit with gaussians is pre-determined, but currently users can add to the list. An import/export list of HR ions to be fit with gaussians is necessary. While on the topic, Jose had always urged that the various lists of ions be linked/merged somehow. That is, from the main list of all ions, there would be flags for each HR ion indicating subsets used for m/z calibration, peak width, peak shape.

(1D) Select the HR ions to fit.

This is highly variable depending on the application. Similar to Mike’s m/z calibration routine, I envision a simple interface whereby a user imports settings appropriate to their type of application.

MJC: Since the UM now I'm wondering about de-constraining the list, too.... hmmm

DTS: I'm not clear what deconstraining the list means. I envision a single prompt to the user at the start of the experiment that would load in their HR ion settings (with flags for m/z calibrations, gaussian fits, peak width, peak shape).

(1E) Select a subset of HR ions in (1D) to be constrained.

Constrained means that the fitting routine does not ‘fit’ this HR ion – the peak height is fixed to a value based on the magnitude of the HR ion’s isotopic ‘parent’ that has been previously fit or determined. This has consequences for the order in which the UMR sets of HR ions at one m/z are fit.

2. Perform HR fitting

In the current AMS HR code the HR fitting is performed on one UMR set of HR ions at a time (all chosen HR ions within an individual integer m/z range). For example, at nominal m/z 18 the HR ions 18O+, H2O+ and 15NH3+ at 17.999161, 18.010559 and 18.023581 are fit in one ‘set’. Typically isotopic HR ions are constrained, and hence in this case the magnitudes of the HR ions O+ and NH3+ would need to be determined from the sets of HR ions at nominal m/z 16 and 17 before the set of HR ions at 18 could be fit. In future ToF mass spec applications involving high-mass organic fragments (>250 m/z-ish?) with a positive mass defect, the division into nominal m/z sets of HR ions could be problematic.
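The ordering requirement in the m/z 18 example can be treated as a dependency problem: the set containing an isotopic ion must be fit after the set containing its parent. The Python sketch below illustrates this with a topological sort from the standard library, and then fixes the constrained heights from the fitted parents. It is not how the AMS HR code does it; the dictionaries, function names and parent amplitudes are hypothetical, and the isotope ratios are rough illustrative values that real code would take from an isotope table.

```python
from graphlib import TopologicalSorter

# Nominal m/z of each HR ion in the example above
NOMINAL_MZ = {"O+": 16, "NH3+": 17, "18O+": 18, "H2O+": 18, "15NH3+": 18}

# Constrained isotopic ions: parent ion and approximate isotope ratio (illustrative)
CONSTRAINTS = {
    "18O+": {"parent": "O+", "ratio": 0.00205},
    "15NH3+": {"parent": "NH3+", "ratio": 0.0037},
}


def umr_fit_order(nominal_mz: dict, constraints: dict) -> list:
    """Order UMR sets so each parent's set is fit before its isotope's set."""
    sorter = TopologicalSorter()
    for mz in set(nominal_mz.values()):
        sorter.add(mz)
    for ion, rule in constraints.items():
        # The isotope's set depends on the parent's set
        sorter.add(nominal_mz[ion], nominal_mz[rule["parent"]])
    return list(sorter.static_order())


def constrained_heights(fitted: dict, constraints: dict) -> dict:
    """Fix each constrained ion's height from its already-fitted parent."""
    return {
        ion: fitted[rule["parent"]] * rule["ratio"]
        for ion, rule in constraints.items()
    }


order = umr_fit_order(NOMINAL_MZ, CONSTRAINTS)
heights = constrained_heights({"O+": 1000.0, "NH3+": 200.0}, CONSTRAINTS)
```

In `order`, the sets at 16 and 17 always come before the set at 18, and `heights` holds the fixed amplitudes that would be passed into the m/z 18 fit instead of being free parameters.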

(2A) Single MS high-resolution stick calculation & diagnostics

Before a user spends a lot of computation time generating HR sticks, it is beneficial to examine HR fits of a few raw spectra at periods of various concentrations and compositions. This involves visually inspecting each UMR m/z region of interest and significant signal. This visual inspection gives a user feedback on all the parameters that go into the fit: m/z calibration, baseline settings, PW, PS, selection of HR ions to fit. It is often at this stage that at least one of the input parameters of the HR fit requires fine-tuning. Other diagnostics for single spectra include:

- Comparison of HR summed to UMR vs. UMR sticks.

- Residuals from the HR fits.

- “5-panel” graph... Some sort of UMR, family summarized spectra plots. This is more important for EI than for soft or other ionization techniques.

- Tabulated results. Having easy access to the HR sticks in a table is useful for those wishing to check the math or perform their own subsequent calculations.

- Mike’s HR ion sensitivity tool.
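The first diagnostic in the list, HR summed to UMR vs. UMR sticks, can be sketched as follows in Python. The function names and the stick amplitudes are made up for illustration. Assigning the nominal m/z by rounding the exact mass is an assumption that would break down for the large mass defects mentioned earlier.

```python
from collections import defaultdict


def hr_sum_by_nominal_mz(hr_sticks: list) -> dict:
    """Sum HR stick amplitudes per nominal m/z; hr_sticks holds (exact_mass, amplitude)."""
    totals = defaultdict(float)
    for exact_mass, amplitude in hr_sticks:
        totals[round(exact_mass)] += amplitude
    return dict(totals)


def relative_difference(hr_totals: dict, umr_sticks: dict) -> dict:
    """(HR sum - UMR stick) / UMR stick for every non-zero UMR stick."""
    return {
        mz: (hr_totals.get(mz, 0.0) - umr) / umr
        for mz, umr in umr_sticks.items()
        if umr != 0
    }


# Made-up amplitudes at the three exact masses from the m/z 18 example
hr = [(17.999161, 2.0), (18.010559, 95.0), (18.023581, 1.0)]
umr = {18: 100.0}
totals = hr_sum_by_nominal_mz(hr)
diff = relative_difference(totals, umr)
```

A relative difference far from zero at some m/z would suggest a missing HR ion in the list or a problem with the peak model at that mass.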

(2B) High-resolution stick calculation for many spectra

As the AMS HR code currently exists in version 1.09, the HR fitting results are only saved in intermediate files for future access via subsequent ‘fetch’ commands. It is beneficial for users to have a place to ‘play’ while keeping the HR fitting results from being modified.

3. Organizing, displaying HR stick results & diagnostics

(3A) Organization (order of steps important here)

(3Ai) Define HR families

It will always be convenient to group HR ions into families. A family can be defined explicitly by listing its members (e.g. family Cx = C+, C2+, C3+, etc.) or by an algorithm that parses the chemical formula of each HR ion. The AMS HR code currently wants families to be determined at the same time as the selection of the HR ions to fit. However, this is unnecessary and future versions will allow more flexibility.
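As an illustration of the algorithmic route, the Python sketch below parses a chemical formula into element counts and assigns a family from the set of elements present. Only the family name Cx comes from the text above; the other family labels and the function names are hypothetical, and leading isotope labels such as the 18 in 18O+ are simply ignored by this parser.

```python
import re

# An element symbol followed by an optional count, e.g. "C4" or "H"
TOKEN = re.compile(r"([A-Z][a-z]?)(\d*)")


def parse_formula(formula: str) -> dict:
    """Turn e.g. 'C4H9+' into {'C': 4, 'H': 9}; the trailing charge is dropped."""
    counts = {}
    for element, number in TOKEN.findall(formula.rstrip("+-")):
        counts[element] = counts.get(element, 0) + (int(number) if number else 1)
    return counts


def family_of(formula: str) -> str:
    """Assign a family from the elements present (illustrative rules only)."""
    elements = set(parse_formula(formula))
    if elements == {"C"}:
        return "Cx"
    if elements == {"C", "H"}:
        return "CH"
    if elements == {"C", "H", "O"}:
        return "CHO"
    return "Other"
```

Because the rules depend only on the formula, families defined this way can be assigned after the HR ions have been chosen and fit.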

(3Aii) Define HR batch entities

In AMS parlance a batch entity is typically a species, like organics, nitrate, etc. While the grouping of HR ions into families is convenient, it will always be the case that the chemical information a user desires may require a mathematical or specialized treatment of the HR fit results beyond the simple family sorting mechanism. A common AMS example is the parsing of the OH+ signal between the water and the organic species. Every HR species is defined first by two items: (a) a list of families and (b) a frag wave which identifies any modifications based on individual HR ions (such as the OH example above).

(3Aiii) Define HR frag entries

HR frag entries explicitly state the mathematical treatment of any/all specialized considerations of an HR ion, whether the HR ion was fit or not.
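A much-simplified sketch of how a species might be built from (a) a list of families and (b) per-ion frag modifications is shown below, in Python. Everything here is hypothetical: the family labels, the amplitudes and especially the fixed 25/75 split of OH+ are made up, and real frag entries may be expressions relating one ion to another rather than constant fractions.

```python
def species_total(sticks: dict, ion_family: dict, families: set, frag: dict) -> float:
    """Sum the HR sticks belonging to a species.

    sticks:     ion -> fitted amplitude
    ion_family: ion -> family name
    families:   family names that make up the species
    frag:       ion -> fraction of that ion assigned to this species
                (overrides family membership for that ion)
    """
    total = 0.0
    for ion, amplitude in sticks.items():
        if ion in frag:
            total += frag[ion] * amplitude
        elif ion_family.get(ion) in families:
            total += amplitude
    return total


sticks = {"C4H9+": 10.0, "C2H3O+": 5.0, "OH+": 4.0, "H2O+": 16.0}
ion_family = {"C4H9+": "CH", "C2H3O+": "CHO", "OH+": "HO", "H2O+": "HO"}

organics = species_total(sticks, ion_family, {"CH", "CHO"}, {"OH+": 0.25})
water = species_total(sticks, ion_family, {"HO"}, {"OH+": 0.75})
```

In this toy case the OH+ signal is shared between the two species, so `organics` and `water` together still account for the full sum of the sticks.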

(3B) Display

Users will require typical output: time series, mass spectra summed or averaged in user-defined ways, and plotted in a variety of formats. What is different from a UMR analysis is that users have an additional type of entity: HR families as well as individual HR ions, unit resolution summed values, and HR species.

(3C) Diagnostics

All the diagnostics outlined in 2A above, and with a time (or other) dimension will be required by users.